JMM 7, Fall/Winter 2008, section 2

Javier Alejandro Garavaglia

MUSIC AND TECHNOLOGY

What Impact Does Technology Have on the Dramaturgy of Music?

2.1. Introduction

As a composer and performer of contemporary music, my principal concern when composing or performing a piece of music is the way in which it is ultimately perceived, whether or not it includes technology to some degree.[1] The subject of music dramaturgy has been treated in different ways and from different perspectives; recently Leigh Landy and later Robert Weale have carried out quite fundamental research in this area; this research is based solely, however, on sound-based music[2] rather than on music in a general and broader sense. Earlier research about the subject is sometimes problematic, as in many cases the word dramaturgy is absent, even if that is what is at issue.[3] In any case, my work as a composer and performer (and as a listener as well) has led me to take a particular interest in some fundamental questions about the dramaturgy of music, for example:

- How does the relationship "creator - listener" function in a musical work?

- How does a performer interpret and transmit music to the audience?

- How does the listener perceive a piece of music? What happens in his mind?

- What type of effect does the creator want to evoke in the audience through a musical composition?

- Is there any difference between the composer’s intention and the performer’s interpretation of a piece of music, specifically in the case of interactive music?

- How does all of this affect music’s dramaturgy?

More questions could be added to this list; some will be answered briefly and in a general way in this article, as not all of them are entirely relevant to the subject at hand. The present discussion concerns the inclusion of technological devices both in the creative process and in the performance of a piece of music, as well as further analysis of whether or not technology itself may constitute a means of artistic expression, coexisting with traditional principles of aesthetics and therefore affecting music’s dramaturgy. If this is the case, it is imperative that both composers and performers reflect deeply about how the audience perceives technology (or its results, depending on each case).

2.2. Different Ways of Perceiving Technology in a Music Composition or Performance

There are some questions for which it will be important to find some answers by the end of this article. These are:

- Does technology have any influence on music’s dramaturgy at all?

- If so, is there a new kind of technological-music-dramaturgy, as technology has been included in the creative-interpretative process?

- Should technology establish its own dramaturgy or should it be disguised within "normal" musical processes?

At first glance, we might find two quite opposite possibilities, both referring to the perception space[4] that technology could or could not create, concerning the whole dramatic[5] content of a piece of music. These opposite views are:

- The audience perceives the dramatic structure of the work as a WHOLE (implying that no new perception space will be created), or

- Technology does create a new perceptual space, where it is possible to understand different levels of dramaturgy during the performance of a piece of music. Some training might be required on the part of the audience (and performers) in order to understand this new type of perception.

Unfortunately, and even if this article will try to cover a vast range of issues emerging from this problematic field, there will still be some open questions at the end, mostly due to the extreme subjectivity intrinsic to the subject of discussion. Having said this, however, there is room for substantial, theoretical discussion about the issue, in order to shed more light on the problem.

One of my major concerns (as composer and performer) is how composers and musicians deal with the subject “dramaturgy of music” in general. In recent years, I have been trying to categorise and systematise the concept of music dramaturgy in a general sense, independently of considerations attendant to any particular kind of music. I am particularly interested in the area of perception after emotions occur (see Section 3 for a proposed complete chart of the entire communication chain for music dramaturgy). A substantial number of publications have been written about the relationship between music perception and emotions (and many of them unfortunately focus only on western music from roughly 1400-1900, regarding the musical parameter melody as the main object of measurement for emotions, leaving most of the other parameters aside). From the point of view of music’s dramaturgy in general (the discussion is therefore not limited to the type of music dealt with in this article), I am interested in a more comprehensive analysis, which would include the perception of all types of music, regardless of genre, age, etc., and furthermore considering all or at least most of music’s parameters[6] in a quite equal manner. Electroacoustic music, as defined at the EARS website,[7] a genre for which technology is part of its very essence, remains quite relegated when it comes to analyses of how it can be perceived (and how it is perceived), despite some substantial research done in the area in recent years, such as The Intention/Reception Project by Weale and Landy.

2.3. Music Dramaturgy

Taking a look at the origins of the word “dramaturgy”, we can find the following options:

Etymology: German Dramaturgie, from Greek dramatourgia, dramatic composition, from dramat-, drama + -ourgia, –urgy. Date: 1801.

(a) the art or technique of dramatic composition and theatrical representation.[8]

(b) the art or technique of dramatic composition or theatrical representation. In this sense English “dramaturgy” and French “dramaturgie” are both borrowed from German Dramaturgie, a word used by the German dramatist and critic Gotthold Lessing in an influential series of essays entitled Hamburgische Dramaturgie (“The Hamburg Dramaturgy”), published from 1767 to 1769. The word is from the Greek “dramatourgía”, “a dramatic composition” or “action of a play”.[9]

We also take a closer look at the first part of this compound word, Drama:

Etymology: Late Latin dramat-, drama, from Greek, deed, drama, from dran, to do, act

1) a composition in verse or prose intended to portray life or character or to tell a story usually involving conflicts and emotions through action and dialogue and typically designed for theatrical performance: PLAY.

2) dramatic art, literature, or affairs

3) a: a state, situation, or series of events involving interesting or intense conflict of forces.

b: dramatic state, effect, or quality (“the drama of the courtroom proceedings”).[10]

As we can see, the word “dramaturgy” has its origin in the German word “Dramaturgie” and its roots can be found in the ancient Greek word dramatourgia. The main term to consider, however, should be drama: its meaning is always related to the concepts of “action” or “event”. Aristotle, in the third chapter of his On the Art of Poetry, describes drama as something “being done”.[11] The word dramaturgy implies the actual composition or “arrangement into specific proportion or relation and especially into artistic form”[12] as well as the knowledge of the rules for gathering these concepts into a (normally) known and preconceived structure (originally, the Greek tragedy was what was intended here).

Ultimately, we can define the dramaturgy of music as the way in which the creator and the listener represent in their minds the flow of a musical occurrence (that is the development of one sonic-event coming from a previous one and leading to the next), which constitutes an entity (ontologically) that as such is unique in itself, as its mental representation also might be (psychologically); however, both cases of “unique representations” might most of the time not be quite the same, as we shall see later. The series of sounds organised according to the rules of each and every musical “being” (the word “being” is here used ontologically, meaning anything that can be said to be immanently, as we cannot always refer to a composition when confronted with music-listening, especially if we consider music from cultures other than Western culture), are the events involving an “interesting or intense conflict of forces”, as seen above in one of the definitions of drama. And, as in the case of the original meaning of the word in ancient Greece, these forces do occur during a performance. The forces in place are the emotions/thoughts aroused by the sounds of the performance, which produce a mental representation of what is occurring in the piece of music: its emergent dramaturgy. Yet another issue is that of expectation: the brain adapts itself already during earlier stages in life, as early as inside the womb, and stores music information in long-term memory. Later in life this helps to recall well-known contours[13] learned (in the form of harmony, melody, rhythm, etc.), which can lead to expectations based upon previous knowledge, expectations of similar results in new, never heard before, but yet similar music contours (see Levitin 2007: 104 and Ch. 8). The general cultural background of each individual will influence the manner in which the music heard is imagined.
If the models or contours are known to the listener, he/she can predict, and even be predisposed to understand, the dramaturgy of a given music by comparing it with previous experiences. Cognitive science describes this also as a mental schema: a framework within which the brain places (stores) standard situations, extracting those elements common to multiple experiences (Levitin 2007: 115-116). “[S]chemas … frame our understanding; they’re the system into which we place the elements and interpretations of an aesthetic object. Schemas inform our cognitive models and expectations” (Levitin 2007: 234). In music appreciation, familiarity (which creates the network of neurons in the brain forming the corresponding mental schema) brings the listener’s attention to music styles that the brain might recognise or not. Even if the listener will generally not be familiar with every piece of music which is listened to, those factors which the brain can hold on to during the act of listening (as stated by Landy) might guide it to form new neural connections in order to recognise new elements with which it is, partially or totally, not familiar.

There cannot be any music dramaturgy present in the score of a piece of music (in the case of written music), but only in the actual mental representation of that music (generally across time, given by any type of performance, at least from the listener’s perspective, as we will see later). This representation occurs not only during (and even after) the actual performance, but also during the creative process.

Music happens in time, it is an "ongoing process" (and if we analyse it from the subjective point of view, time must be considered - for this purpose at least - as a relative value too). If we take a look at the definition of drama, we cannot ignore the word "event". An event is an "ongoing process" as well. The structure of every event is perceived by the "recipient" and pertinent aspects of it are saved in his memory as a certain amount of information referring to the contemplated event (for example, his own conception, "mental" snapshots, etc). This structure is the "dramaturgy" of the event. If we consider now that music is in fact an event, the description for drama given above should also be applied to music. Peirce's theory regarding phaneron is quite close to this view.[14]

This said, a musical event (or occurrence) can be considered as a continuum of sounds and breaks, a continuum which is in itself ontologically full of sense. This sense gives the outer (macro) and inner (micro) structural identity of a piece of music. If something changes this structure, the results on the recipient’s side might not be the same: the dramatic character will change and so will the manner in which it is understood.

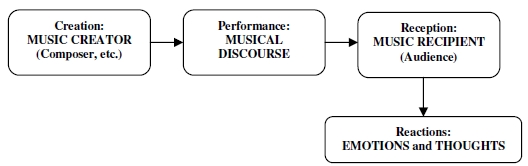

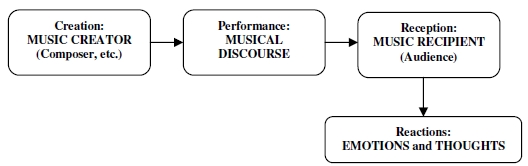

In order for music to be in a position to “express”, a communicative process has to be established. In this way the creator of a certain kind of music (generally, but not exclusively, the composer), delivers through a process (the actual performance, meant here in a broad and generic way) a musical discourse, which will be perceived by an end-recipient (generally, an audience of listeners). Research in this area uses the words “arousal” (borrowed from psychology) or “activation” to characterize the response of people to music.[15] The act of reception-perception should produce in the listener diverse reactions, which can mainly be circumscribed to emotions and thoughts. The communication chain in its first instance is represented in Figure 1 below:

Figure 1 Music’s communication chain (first instance)

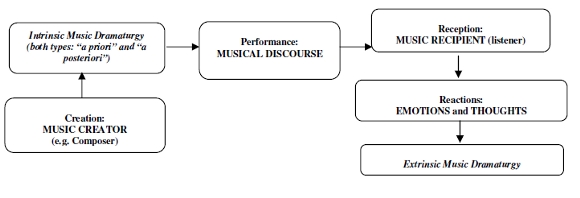

Therefore two main different categories of music dramaturgy can be identified: the intrinsic (or inherent) and the extrinsic (or emergent) music dramaturgy. Let us take a detailed look at both.

1) Intrinsic (or inherent) dramaturgy: the dramaturgy that the musical discourse carries within itself; it is of an objective nature (planned, however, subjectively by the music creator). Its origin can be set at the very moment of conception of any kind of music and therefore located on the creator’s side of the communication chain. It is independent of the actual passage of time, although time is intrinsically included, as any music can only “be” during time. The intrinsic type of dramaturgy can be divided into two subtypes:

   a) "a priori" intrinsic dramaturgy, where the materials for the creation of the piece (the principles of the composition, which will govern the whole piece and determine its own dramaturgy) have their origin before the composition of the music itself takes place. The music tries to describe or transmit this main idea, which normally will be a literary subject such as a libretto, a storyboard, a poem, etc. In this case, the dramaturgy is preconceived and can be known (but not perceived) before the actual representation or performance of the piece. Included in this subtype are pieces (and genres) such as opera, lied, symphonic poem, etc. We can even include the sonata form here, as its rules make it more or less predictable with regard to how its progression will generally (but not specifically) be structured.

   b) “a posteriori” intrinsic dramaturgy is the type of dramaturgy that does not have a predetermined dramatic plan evident to the listener. Its origin and development are based on principles and concepts directly linked to either pure musical aspects[16] and/or complete extra-musical contexts,[17] neither of which can give the listener a clear idea (or any at all) of the dramaturgical path intended in the piece. The dramaturgy might not be preconceived at all, or at least might remain quite hidden without some extra information - i.e. any of Landy’s “something to hold on to – factors” (SHF’s) - or even an exhaustive musical analysis. In this latter case, it is hardly possible for the composer's ideas to be interpreted by the audience as these were conceived unless the listener is granted access to further information outside the sphere of the music event itself (additional explanations, programme notes, etc.).[18] In most cases, the listener might deduce a dramaturgy fully his own, without any relationship to the one conceived by its creator, disregarding even the aid given by any SHF. There is a very wide spectrum of possibilities for the “a posteriori” intrinsic dramaturgy, ranging from pieces like the Kunst der Fuge by J. S. Bach (where we have a typical case of absolute pure music, music composed for the sake of music and music theory itself, without any predetermined dramaturgic path) to some "stochastic" music, pure improvisation or even chance music. This category can even include some programmatic music like Berg’s Lyrische Suite (1926),[19] or Lutosławski's Cello Concerto (1970),[20] where the structure might well be within the ranges of the “a priori” preconceived dramatic types (Berg’s piece is a string quartet in six movements), but both composers diffuse or even hide their extra-musical concepts within a pre-conceived (or pre-determined) structure.

2) Extrinsic (or emergent) dramaturgy: it arises solely in the listener’s mind by means of the act of listening and therefore, it is of an entirely subjective nature. Circumscribed to the recipient’s side, this is the dramaturgy happening in his mind during and after the performance (the musical drama, action or event), which requires the passage of time to occur. This dramaturgy arises only through the contemplation of music, and, as we will see later, can have an impact even a long time after the actual performance has finished. This type of dramaturgy will in some cases partially (the degree is always variable for each audition and listener) coincide with the “intrinsic” type; in others, however, it may not be related to it at all. We will see later on that in the case of technology involved in the communication chain, there will be some cases in which the emergent dramaturgy is the only dramatic possibility for certain music. The emergent dramaturgy is ultimately and absolutely a subjective act, happening in the mind of the recipient, but having, however, its source outside the subject itself, and so it might not be independent of the ideas intended by the creator of that music, as the recipient's cultural, cognitive background, capacity to understand, expectations, etc. must be taken into consideration.

Now the graphic in Figure 1 can be completed with the whole communication chain, as shown below in Figure 2:

Figure 2 Music dramaturgy: communication chain showing both the inherent and emergent types of dramaturgy.

The figure shows that the emergent or extrinsic dramaturgy happens after emotions and/or thoughts have taken place. Listening to music can elicit mainly two different reactions, and emotions can be only one of them, even if they are the one most commonly identified by both listeners and researchers in the area (see Sloboda 2001 for example). The other reaction can be thoughts and the subsequent reflection upon them. Some people do seem to listen to the same music through mainly one of these types of perception, although both might be present in the end, the only difference being the degree of one type compared to the other. We cannot ignore the fact that it is an absolutely personal decision (conscious or not) whether or not a listener wants to get involved with music either emotionally, intellectually or both, and this can vary considerably from one situation to another. Ultimately, this does not relate directly to whether or not music dramaturgy can be perceived; given the explanations which have been offered here, perception of it should nevertheless occur in both cases.

Regarding music listening, however, emotions can be twofold, as they might predispose the music-recipient to understand the music in a particular way. They could define - or at least influence - how, during the act of listening, the intrinsic dramaturgy of the music might be perceived. On the other hand, if the listener is not (mainly) emotionally involved during the reception of a musical discourse, then thoughts invariably emerge. Even in this case, emotions might well play a role, as they might occur in a later step of the process, and therefore they could be the reaction to the understanding of that particular music. I consider this latter case of vital importance, as emotions would appear in this case not before but after the extrinsic dramaturgy has been perceived, as a consequence of the dramaturgy itself and not as an immediate reaction to the music. And those emotions might have a different impact on how listening to (and expectations of) the piece takes place in the future (by means of repeated listening) than if the emotions had been the first reaction to understanding the dramaturgy. The dramaturgy that emerges in the recipient’s mind can change from time to time depending on his moods, cultural background, life experiences, etc. And when it changes, it might even change the type of emotion aroused while (or even after) listening. Hence, once a particular dramaturgy has been devised by the listener’s mind, emotions could be a possible further reaction to that dramaturgy, possibly (but not necessarily) resembling those emotions that evoked that particular dramaturgy in the mind in the first place. This can be evident in cases in which the same piece of music (even in the same interpretation, as in the case of a recording) might produce different reactions in the same person at different times.[21]

Though both intrinsic and extrinsic types of dramaturgies (from outside or from inside the listener’s own mental universe) might be similar in many cases, they do not have to be (and generally will not coincide at all).[22] Since every piece of music has the power to transmit its information and to produce in the listener certain amounts of feelings and thoughts, the “recipient” takes this information and translates it into his or her own conception. This is a subjective act, which, however, has its source outside the subject itself. This process is absolutely independent from the ideas meant by the composer. And it is so subjective that it depends highly on the recipient's cultural and cognitive background, including even the prosodic cue (conventions in language – and therefore a subject for linguistics – which determine different types of intonation in everyday speech).

The most difficult task in the field of analysing music dramatics is the determination of where one type of dramaturgy begins or where the other ends. There is almost always some kind of "cross-fade" between both main types mentioned above, not only during the conceiving moment of creation (i.e. while composing), but also during the actual performance and even beyond, as we shall see in the next section.

An extraordinary example of how, from its very conception to its final performance (and beyond), both types of dramaturgies are present and work together is Luigi Nono’s PROMETEO (1984). Nono had undoubtedly a precise (intrinsic) dramaturgy in mind, but in the way the piece is performed,[23] the extrinsic (emergent) dramaturgy will work differently for each member of the audience, not only due to personal reasons (cultural background, etc.), but also due to their physical position in the concert hall during the performance. I would call this work (from the point of view of its dramaturgy) a multi-dimensional piece, as it will “fit” differently in each listener’s personal, own “universe”, reminiscent of Peirce’s phaneron.

2.4. Delalande’s Listening Behaviours

So far, we have defined two different types of music dramaturgy, intrinsic and extrinsic, each respectively with regard to the conception and perception perspectives (intention/reception) of music creation and its performance.

In his book Understanding the Art of Sound Organization, Landy (2007) quotes and explains Delalande’s six listening behaviours, which Delalande wrote about in his article “Music Analysis and Reception Behaviours: Sommeil by Pierre Henry” (Delalande 1998). This article was based on a particular piece by Pierre Henry, belonging to the musique concrete genre. These behaviours could actually be applied to the appreciation of any type of music, however, whether or not it involves modern technology. They all occur on the part of the listener, and therefore should belong to the emergent or extrinsic dramaturgy type proposed in Section 3. They are:

- taxonomic: distinguishing the morphology of structures heard.

- empathetic: focus on immediate reactions to what is heard.

- figurativization: search for a narrative discourse within the work.

- search for a law of organization: search for (given?) structures and models.

- immersed listening: feeling part of the context while listening.

- non-listening: losing concentration or interest in listening.

In my own analysis of these behaviours, a seventh should be added to this list: after-listening. This would be the process in our memory of past-music-listening and the recollection of the experiences had both while listening and while remembering what had been listened to before, which might alter our conception of the dramaturgy of that particular music at the next audition or even at each recollection (consistent with Multiple-Trace Memory models (MTT), as in Janata 1997). Peirce’s phaneron appears here as an obvious analogy as well. This seventh behaviour is the one that might allow the listener to have a particular approach to a particular piece of music and therefore to condition in a certain way (variable for each case, due to its utter subjectivity) future auditions of the same music (whether a different version or even the same recording). In many cases, moreover, listeners might only recall the impression (the understanding of extrinsic dramaturgy) that a piece of music made in their minds, without factually remembering any of its sound combinations (melodies, harmonies, layers, etc.).

From the point of view of music technology, behaviours number 2 and 5 might be quite relevant. Mainly the idea of “immersion” is relevant to multi-track music due to the actual “immersion” of the listener in the spatial dimension of sound, whether or not the music is acousmatic, interactive or even an installation.

All these listening behaviours do not take into consideration technology itself in the perception chain, however. I make this remark, not as a criticism of the research itself, but to point out how technology itself might be ignored in some cases, even if this could be due to the fact that the listeners who took part in Delalande’s research did not identify technology as part of their listening behaviour, or, more likely, were not asked about this, as this was not the main subject of research.

One of the main problems that both Musique concrete and the Elektronische Musik faced in the 50’s (and to a lesser degree, some other types of sound-based music since that time), in trying to reach the type of broad audiences and the attention that other types of music attracted, might have been that the presence of a living being performing was apparently missing.[24] This could in some cases directly and immediately be derived from Delalande’s sixth behaviour. In this case, technology not only would not create any new space in music perception (not to mention its dramaturgy), but would even kill the very possibility of its existence. In the case of inexperienced audiences, one of the SHF’s (as specified by Landy) might be the performer, and where the performer is missing, a listener might consider what he is listening to as something other than music. The non-listening behaviour might become apparent here, at least in the form of not listening specifically to music: dramaturgy of music therefore cannot exist if music itself is nonexistent for the potential listener.

Granted, the last 50 years have brought incredible advances, not only in technology, but also in the way we all deal with it on a daily basis (and also in the way we accept more sounds as “musical” than we did in the past). And this of course also has an impact on music primarily based on technology and subsequently on its perception. It is a paradox that a big part of this development has taken place outside so-called “serious” or “academic” music,[25] even if the related problems had their origin right at its core. This has been beneficial, nonetheless, as it has made the use of technology in music something “quite” normal for the past 50 years or so, and its perception is easier to detect, as well as its boundaries. While Stockhausen’s or Eimert’s research in pure synthetic sound in the early 50’s had an impact only on a quite moderate number of audiences, given the disassociation at that time of principally serial music from the general public (and the same applies to Musique concrete, even if not linked to any serial concept), some other technological advances in the coming decades, such as the Moog synthesizer, the sampler, or the Chowning FM principles on Yamaha synthesizers (included in the legendary DX7) had an immediate impact on a much broader audience, which shaped and changed the (mainly) pop music of their times, and in many respects, the way people listened to music (and sound itself as well) or even produced it. Examples of this should include a substantial part of pop music from the 60’s and 70’s, in particular artists such as the Beatles (i.e. introducing the Mellotron in 1966 with John Lennon’s “Strawberry Fields Forever” and incorporated on the Sgt. 
Pepper album in 1967); Jimi Hendrix (the three studio albums of the Jimi Hendrix Experience, all of them introducing innovative recording techniques, new ways of using the electric guitar to produce unconventional sound – unconventional at least for the pop music scene – etc.); Pink Floyd (on albums like Meddle or Ummagumma, mostly by means of the use of noise and different effects on those albums), etc. Also worth mentioning here is Miles Davis’ “electro jazz-fusion” of the late 60’s and early 70’s (i.e. the album Bitches Brew).

2.5. Music Technology and Its Interaction With Music’s Dramaturgy

Let us now turn our attention to the main topic of this article: how does the reception of this kind of interaction between the piece of music and the audience work when technology is introduced into the process? Does it remain the same or does it change? And if it does change, how does it happen?

With the uninterrupted and quick development of always-new means of expression coming from the technological side, it might be wise to rethink existing aesthetic and perceptual concepts for our current “multimedia times”.

Computers nowadays offer plenty of performance options. Some of these regulate the course of a composition or improvisation through “random” or “chance” processes, some others make decisions based upon probability (i.e. stochastic algorithms). Agreed: “chance” is nothing new in the music of the past decades. To a greater or lesser degree, chance has been present in pieces by composers such as Cage or Boulez.[26] The inclusion of computer-steered algorithms during a performance (real-time DSP) is something that has been possible only since around the 90’s, however, as neither hardware nor software were previously capable of carrying out the required tasks.

This is one of the main respects in which I see a new dramatic meaning being introduced by technology: “random” events in the performance of a piece, although determined by the composer (and/or programmer), are decided in real time by the computer and cannot be totally controlled by the composer (or even the performer) during performance. The composer must therefore have a precise idea of what he wants to happen with regard to the intrinsic drama of the piece if he does not want this to be chance-determined by the machine; this might, after all, ruin the composer’s intrinsic dramatic conception of the piece. In other words, the creator must programme/compose the algorithms in such a way that they produce some type of “controlled chaos”. This is of course in essence not different from the interpretation of graphic scores in the 50’s (aleatoric music); the dramaturgy of these pieces depended greatly on how they were performed. Still, the performance was put in the hands of a human being (the performer), to make his own interpretation, not entrusted to a machine.
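The idea of “controlled chaos” can be illustrated with a minimal sketch in Python. It is not taken from any particular piece or software; the event names, the weights and the repetition limit are hypothetical values chosen only for illustration. The composer fixes the possible events, their probabilities and one hard constraint, and the machine merely chooses within these bounds at each step.

```python
import random

# Hypothetical material: the composer fixes the event types, their
# probabilities and a hard limit; the machine only chooses within them.
EVENT_WEIGHTS = {"grain_cloud": 0.5, "delay_burst": 0.3, "silence": 0.2}
MAX_CONSECUTIVE_REPEATS = 2  # assumed dramaturgical constraint


def next_event(history, rng):
    """Pick the next event at random without exceeding the repeat limit."""
    candidates = dict(EVENT_WEIGHTS)
    recent = history[-MAX_CONSECUTIVE_REPEATS:]
    if len(recent) == MAX_CONSECUTIVE_REPEATS and len(set(recent)) == 1:
        # The same event has already occurred the maximum number of
        # times in a row, so it is excluded from this draw.
        candidates.pop(recent[0])
    names = list(candidates)
    weights = [candidates[name] for name in names]
    return rng.choices(names, weights=weights, k=1)[0]


def generate_sequence(length, seed=None):
    """Generate a sequence of events; a fixed seed makes it repeatable."""
    rng = random.Random(seed)
    history = []
    for _ in range(length):
        history.append(next_event(history, rng))
    return history


if __name__ == "__main__":
    print(generate_sequence(12, seed=1))
```

Each run without a seed yields a different succession of events, yet no event can occur more than twice in a row: the detail is left to chance, while the overall dramatic shape remains within limits set in advance.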

In this case, the questions to ask are:

- from the perspective of extrinsic music dramaturgy: what happens to the audience’s perception when technology (i.e. a computer) is in charge?

- from the perspective of intrinsic music dramaturgy: which further possibilities for creative innovation, whether random or not, does technology give to composers?

In the type of composition where chance dominates both the intention and reception of most of the musical result through the use of computers, the cultural and cognitive background[27] of the audience must play a prominent role, more so than in other, more traditional types of music. If the listener relies on his/her long-term memories to anticipate what might come from this music, the actual results might contradict his/her expectations, and therefore the intended dramaturgy might not become apparent. Even if electroacoustic music works with pitches, durations, harmony etc., these parameters are often disguised in the form of sound-files, samples etc., created either synthetically or recorded.[28] In this case, a pertinent question might be: does an average audience perceive these parameters in the same way as in pure instrumental music? I am aware that some pieces composed in the past 30 years exclusively for traditional instruments such as the violin might also present difficulties in perceiving pitches and rhythms; nevertheless, these difficulties are (due to the association music-instrument-pitch) not as great as those of trying to perceive, let’s say, the pitch and rhythm of a water sound. Following Wittgenstein and Rosch, the cultural background of average western musical audiences will not normally include water in the category “sound-to-be-sung”, for example, and might relate it to its source (river, etc.) instead, depending on the morphology of the sound itself. This kind of categorisation will have an impact on the way the dramaturgy is understood. The Intention/Reception Project by Weale offers interesting results concerning inexperienced listeners and acousmatic music (Weale 2006: 196), which I would encourage the reader to take a look at.

Moreover: how does the performer react to this? Are performers, as a result of current music education programmes, in a position to understand how to interpret and react in these situations, whether they involve fixed sounds coming from a medium like tape or random processes running in real time from a computer? This question might not have a conclusive answer, and the answers proffered might be quite diverse. There are institutions (conservatories, etc.) which give priority to instrumental students by putting a lot of effort into introducing them to different types of new music and their technologies. Others are more traditional in their approach. The challenges posed by composers who use technologies which are constantly being renewed and updated, however, constitute a laborious task for instrumentalists who want to keep up with the latest advances in sound or mapping technology, for example. And even if this “update” takes place through the work of some very thorough and enthusiastic musicians, there is still the problem of similar or identical technologies being used in completely different ways by different composers. In my own experience, instrumentalists concentrate on some specific type of technology, but most are eager to learn new ways of playing new music. The key seems to be in giving them a clear picture of how the performance should work, revealing to them the “intention” factor.

This said, have the computer and its associated technology become a third member in the chain "creator - receptor"?

This is indeed quite difficult to answer because technology may assume very different types of roles. For example, what happens in some cases such as interactive multimedia installations, where the audience may determine the dramaturgical development of the work of art?[29] These results are normally (depending on the degree of autonomy with which the algorithms have been programmed) quite dissimilar from case to case, even considering the same installation. Audiences in general react quite dissimilarly to the sonic results of such installations, depending on what is activated each time, how it is activated and who is interacting.

Another case, very common nowadays, is the combination of sonic-art[30] with video, working in most cases as a solid unit. In many cases, the morphology of sound (its spectral components) is intimately related to the morphological characteristics of the visual part. A clear case of this can be seen in the work of the American composer, multi-media artist and performer Silvia Pengilly. The introduction of Jitter to the MAX software package has also brought sound and image into closer interaction and opened new and exciting possibilities, mostly in live-performance situations.

If we analyse in particular computer music and art during the last 20 years, there is evidence that technology might indeed open a new dimension in the perception of the dramaturgy of music. Let us consider, as an example, the new generation of laptop performers, who are also in some cases the creators of the algorithms producing their music. In this case, the computer performs quite alone, with some (or sometimes no) manipulation by the performer. If some manipulation does take place, it generally does not have a big impact on the musical results in the end, as this manipulation is generally of a “handling” nature rather than a true interaction, and the decisions might well be taken exclusively by means of algorithms. I am aware that not all laptop performances are like this, but this is the type which really makes a difference with regard to the topic of this article. Even in those cases where the performer interacts with the computer, and even if the actual sounds of this kind of music might already be sampled or be produced by internal generators and further synthesis methods, or perhaps by some kind of interaction, the whole dramaturgy emerging from it is in most cases the result of how all events have been combined by a more or less acute degree of chance or randomness. Algorithms (or improvisation by the performer, or a combination of both) take control of the dramatic trajectory of the musical discourse, with an input from the performer which could be of any degree, from none to full control. In these cases, the extrinsic or emergent dramaturgy, as defined previously, is the only dramaturgy that can really exist, as (to a higher degree than in simple improvisation) the course (or musical “discourse”) of the dramatic contents of the piece of music does not come from a human mind; only its triggering principles (the algorithms themselves) do.

As a result of these considerations, we may distinguish new types of composer (or “music-creator”) categories:

(1) “composer-programmer”: since the word "composer" is at the beginning of this concatenation, the composer is still thinking in terms of musical dramaturgy, and the technological input is subordinated to the music. In this case, the composer uses technology within traditional boundaries. This is the case for most scored interactive music (with little or no improvisational content, where most of the dramatic steps are clearly written in music notation), such as, for example, many interactive pieces by Cort Lippe. I would include here also most of my own pieces for live-interaction with computers (see type 4 below for some examples).

(2) “programmer-composer”: here the technological input is normally at the foreground of the creation process, mostly as the result of the changes introduced by technology. It is sometimes very difficult to determine (for the composer as well as for the audience) whether or not the musical dramaturgy of the piece is established only by the addition of running computer processes. This type may be clarified if we think about some multimedia installations, where the music sometimes plays (consciously) a subordinated role, as does its dramatic character. In such works of art, the dramaturgy is carried out by the whole and not by one of its parts (a case similar to that of film music). Examples of this sort can vary enormously. An early example would be Gottfried Michael Koenig’s string quartet (Streichquartett, 1959), which, even though it does not yet use technological devices for the random automatic calculation of all musical parameters, nevertheless establishes the basis for Koenig’s future computer programmes for computer-aided composition, Projekt 1 (1964) and Projekt 2 (1966).

(3) “audience/composing-programmer”: “a priori” and “a posteriori” programmed processes interact in a new way, it being very difficult to determine where the first and where the second type of dramaturgy begins or ends. This is the case for most interactive installations, when the audience itself interacts with the production of the sonic events in time and therefore creates the dramaturgy of the particular event. The ratio of interaction audience-programmer-composer can fluidly vary to one side or the other.

(4) “interactive-performer”: in this case, the performer, with the aid of some kind of equipment on the stage (sensors, etc.), may give new dramatic sense to the piece at every new performance, acting together with his equipment as "co-creator". Although not dealt with in this article, as it would be outside of its scope, there are some issues referring to the way performers deal and interact with technology that vary quite substantially from piece to piece and from performer to performer. Performers are often put under enormous pressure, mostly by having to react to click-tracks, or having to react very frequently to the tape part, or having to react depending on what the algorithmic interaction dictates, or even having to press lots of pedals, switches, etc. This can be equally distracting for both performer and audience. In many cases, performers cannot concentrate completely on producing the music they want in the particular piece they play, due to the number of extra activities that technology dictates. And this has a direct impact on how the audience perceives the dramaturgy of the piece (or does not perceive it – in some cases Delalande’s sixth behaviour might be the only response, if the complexity of the interaction ends up obscuring the musical discourse). My personal solution to this, as a composer and performer, is the use of automation in the composing/programming stages, as I have written, for example, in my article “Live – electronics – Procesamiento del sonido en tiempo real. Perspectiva histórica – Distintos métodos y posturas”.[31] Some of the pieces in which I used this solution are: Hoquetus for Soprano saxophone and MAXMSP (2005), NINTH (music for viola and computer) (2002) and Ableitungen des Konzepts der Wiederholung (for Ala) (2004) (both for Viola and MAXMSP), and more recently Intersections (memories) for Clarinet in B (2007-8) and Farb-laut E Violet for Viola (2008), both using real-time electronics in 5.1 Surround sound with MAXMSP.

(5) “composer-technician-producer”: this is the case in which musicians use technology to change the mix of a track of music in such a way that it is the technology itself which might be changing the entire dramatic content of that music, for example, by making alternative mixes of some piece of music in a studio (granted, this is more frequent in pop-music, where different mixes are intended for different situations, like dance, radio-mix, etc., but in any case it does not exclude other, less commercial, types of music).

2.6. Conclusion

As a result of the technological advances of the past 20-30 years, a vast variety of sound software is nowadays in a position to create sonic results (some innovative, some not) which cannot be obtained otherwise, that is, without using that particular technology. Therefore, the perception of technology in itself turns out to coincide with the musical dramatic responses and results of the music borne by such technologies. This is in itself a remarkable characteristic of technology applied to music, and in this sense, it does indeed open new frontiers for music perception and its ultimate dramaturgy. We must not forget that the reaction experienced by the audience is always different, depending on whether or not it is familiar with the aesthetics of the work of art. When it is not, some kind of new personal experience might be “awakened”; as seen from the perspective of neuro-science, the brain is less prepared to expect results from music in which technology might decide part or the totality of the sound and dramatic scope. Expectations might not be a factor as they usually would be (regardless of whether the listeners are less experienced or experienced ones), as the sonic results (and their subsequent understanding) might be new.

As we saw in Section 3, expectation can be ruptured with surprise if new elements appear (elements unknown to the listener’s brain), and depending on how they are combined in a piece of music, the schemas coming out of this appreciation might be stored in the brain and then recognised in future hearings of the same piece or even others that share similar characteristics. Technology can be one of those new elements, which can enhance expectation through, for example, an unknown or surprise factor, which will create a new perceptual space. In those cases where neither familiarity nor expectation can be aroused, it might be likely that the listener will not recognise the actual musical event as such, as in Delalande’s sixth behaviour, as explained in Section 4.

Even after the categorisation in section 5, it is still difficult to tell precisely how music technology does create a space of its own in the perception of music’s dramaturgy; the main difficulty is the dependence mostly on each subject’s listening behaviours and experiences and the way technology-based music might be consequently interpreted. Further empirical research in which composers’ and listeners’ experiences of technology-based music are shared in order to identify the impact and effect of perceived technology on dramaturgy might well be needed in order to yield deeper results.

Emergent or extrinsic dramaturgy of music will always be present, as long as the music in question has the power to arouse some kind of emotional and mental reaction in the audience. Here is an exciting aspect of the use of technology in music: it might provide new elements of perception for the audience and in turn give rise to totally new experiences.


Figure 1 Music’s communication chain (first instance)
